MontereyBay.social experienced a minor service degradation affecting uploads of certain types of videos. Here's the incident report, analysis, and resolution.

On Wednesday, December 21st at 21:19 UTC, MontereyBay.social received a report that video uploads were failing. The reporter was trying to upload a 20-second video from their mobile device. They tried with Mammoth, which showed a spinner for a long time, and with the official Mastodon app, which showed "Server Processing" for a long time before failing with the message "Upload Failed The operation couldn’t be completed. (MastodonCore.AppError error 0.)".

Timeline

All times are in UTC.

- Dec 21 21:19 @paul - Responded to the initial report and reproduced the issue with videos recorded on his iPhone. Sidekiq queues were processing normally and there were no long-running jobs.

- Dec 22 02:03 @paul - Successfully uploaded three videos in "MP4 Base Media v1 [ISO 14496-12:2003]" format.

- Dec 22 02:XX @paul - Found a message in the ECS console logs showing that the Sidekiq service had been terminated due to an out-of-memory error. ECS had restarted the service automatically.

- Dec 22 02:XX @paul - Manually changed the ECS Task Definition for the Sidekiq service from 0.25 vCPU/0.5 GB memory to 0.5 vCPU/1 GB memory (revision 2) and updated the ECS service to use it.

- Dec 22 03:40 @paul - Successfully uploaded the iPhone videos that had previously failed. The "Processing" phase of the upload still takes a long time.
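
The task definition change at 02:XX can be expressed in code. This is a minimal sketch, assuming the smaller Linux task sizes listed in the AWS Fargate documentation (256 CPU units = 0.25 vCPU, and so on; larger sizes also exist); it checks that a CPU/memory pair is one Fargate accepts before writing it into a task definition. The helper names, the `sidekiq` family name, and the trimmed-down task definition dict are hypothetical.

```python
"""Sketch: validate a Fargate task size before creating a new task definition revision.

Assumption: the table mirrors the smaller Linux task sizes in the AWS Fargate
documentation; verify against current AWS docs before relying on it.
"""

# CPU units (1024 = 1 vCPU) -> allowed task memory values in MiB
FARGATE_SIZES = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def is_valid_fargate_size(cpu_units: int, memory_mib: int) -> bool:
    """Return True if the CPU/memory pair is an allowed Fargate task size."""
    return memory_mib in FARGATE_SIZES.get(cpu_units, [])


def resize_task_definition(task_def: dict, cpu_units: int, memory_mib: int) -> dict:
    """Return a copy of a task definition dict with new task-level cpu/memory.

    Task-level cpu and memory are strings in ECS task definitions
    (e.g. "512" and "1024"), so the values are converted here.
    """
    if not is_valid_fargate_size(cpu_units, memory_mib):
        raise ValueError(
            f"{cpu_units} CPU units / {memory_mib} MiB is not a valid Fargate size"
        )
    updated = dict(task_def)  # shallow copy; the original dict is left untouched
    updated["cpu"] = str(cpu_units)
    updated["memory"] = str(memory_mib)
    return updated


if __name__ == "__main__":
    # Hypothetical, trimmed-down task definition; real ones carry many more fields.
    sidekiq = {"family": "sidekiq", "cpu": "256", "memory": "512"}
    print(resize_task_definition(sidekiq, 512, 1024))
```

Both the old size (256/512) and the new one (512/1024) pass the check, while a pair such as 512 CPU units with 512 MiB is rejected. A dict returned by DescribeTaskDefinition also contains read-only fields such as `taskDefinitionArn` and `revision`, which would have to be removed before passing it to RegisterTaskDefinition.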

Root Cause

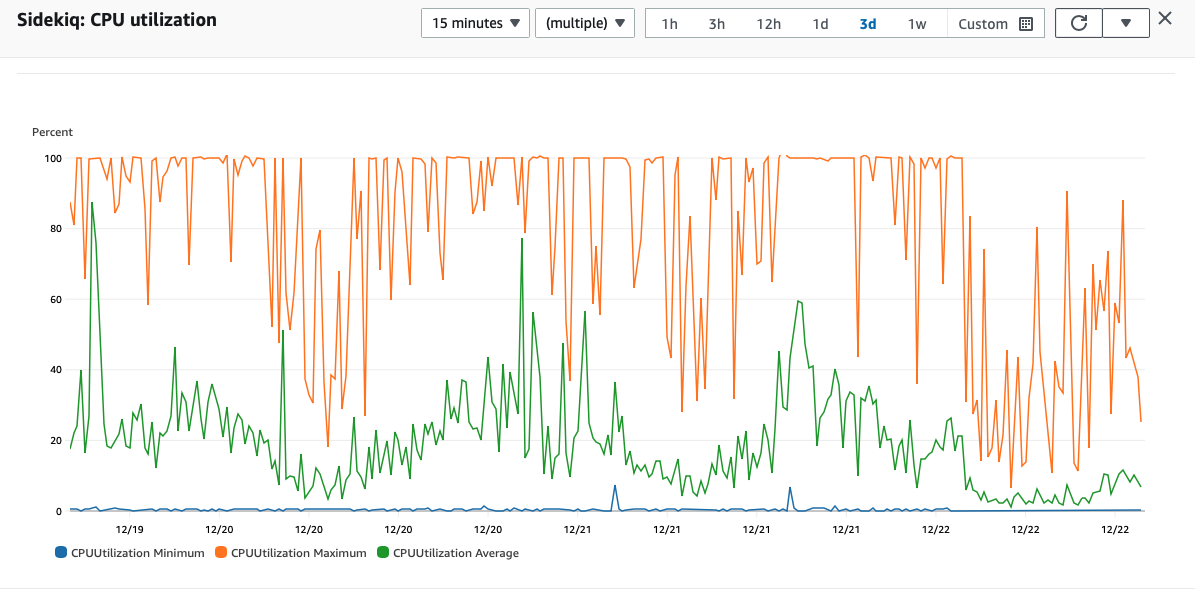

The upload failures were caused by insufficient resources on the Sidekiq ECS service for processing certain types of videos. In the three days leading up to the report, maximum CPU utilization on the Sidekiq service container hit 100% during most peak periods:

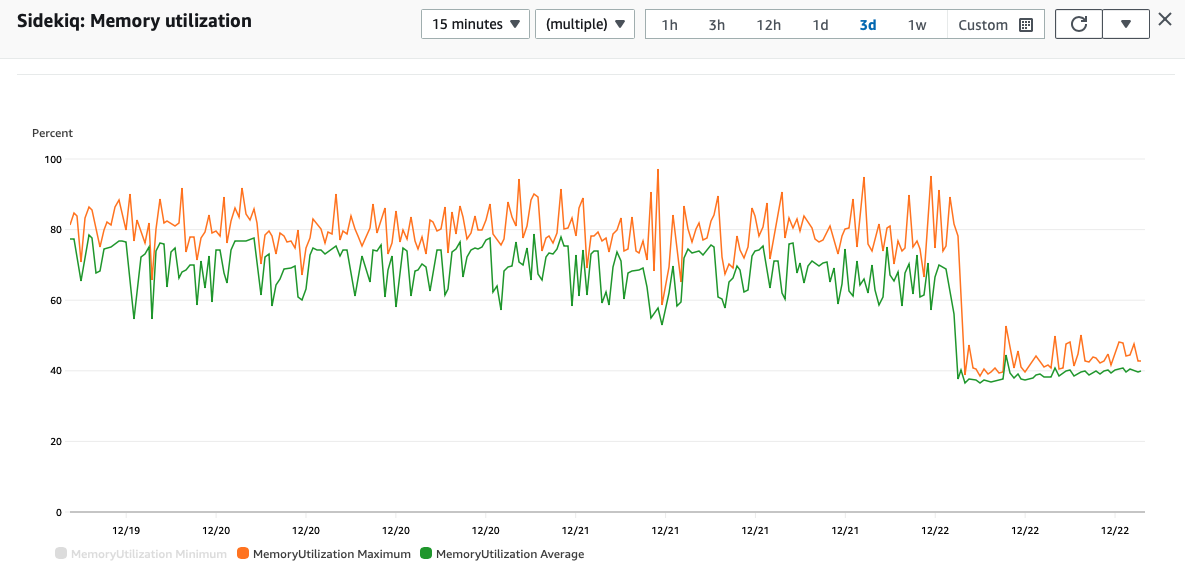

Memory utilization was also high, though it did not max out the way CPU did.

@paul noticed that the Sidekiq service had been terminated at some point in the previous few days due to an OutOfMemoryError and then restarted automatically (he did not record the timestamp of that event). He also found a log message from around the same time that contained an ffmpeg error. He hypothesized that ffmpeg needs more memory and CPU to transcode certain types of videos, and that increasing the resources available to the Sidekiq service would fix the immediate problem with short video uploads.
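
The "100% during most peak periods" observation could be quantified from the underlying metric rather than read off a chart. This is a minimal sketch, assuming datapoints shaped like the `Datapoints` list that CloudWatch `GetMetricStatistics` returns for `CPUUtilization` with the `Maximum` statistic; the helper names are hypothetical and the sample values are synthetic, not data from this incident.

```python
"""Sketch: measure how often a service's maximum CPU was saturated.

Assumes CloudWatch GetMetricStatistics-style datapoints (dicts with
"Timestamp" and "Maximum"). The sample values below are synthetic.
"""
from datetime import datetime, timedelta, timezone


def saturated_periods(datapoints: list[dict], threshold: float = 100.0) -> list[datetime]:
    """Timestamps of periods whose Maximum met or exceeded the threshold.

    CloudWatch does not guarantee datapoint order, so results are sorted.
    """
    return sorted(dp["Timestamp"] for dp in datapoints if dp["Maximum"] >= threshold)


def saturation_ratio(datapoints: list[dict], threshold: float = 100.0) -> float:
    """Fraction of periods at or above the threshold (0.0 if there is no data)."""
    if not datapoints:
        return 0.0
    return len(saturated_periods(datapoints, threshold)) / len(datapoints)


if __name__ == "__main__":
    start = datetime(2022, 12, 19, tzinfo=timezone.utc)
    # Synthetic hourly maxima, for illustration only.
    samples = [
        {"Timestamp": start + timedelta(hours=h), "Maximum": m, "Unit": "Percent"}
        for h, m in enumerate([42.0, 100.0, 87.5, 100.0])
    ]
    print(saturation_ratio(samples))
    print(saturated_periods(samples))
```

Lowering `threshold` (for example to 90) would also count periods that came close to saturation without quite reaching it.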

Things that went well

@paul was able to reproduce the problem right away. Thanks to a recent work project involving ECS and Fargate, he had fresh experience creating and modifying Task Definitions, so he made the required changes and deployed them quickly once the root cause was identified. Deploying the new Sidekiq container did not cause any service interruption.

Things that could be improved

We did not have a dashboard set up to monitor the basic operational metrics of the ECS cluster, so we did not know about the high resource usage until a member reported a failure. @paul also deployed the new version of the Sidekiq task without knowing whether it would cause downtime and without posting a notification on the Mastodon instance.
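
One way to make a monitoring dashboard reproducible is to keep its definition in code. This is a minimal sketch that builds a CloudWatch dashboard body with one widget for CPU and one for memory, plotting the `Maximum` of the `AWS/ECS` `CPUUtilization` and `MemoryUtilization` metrics for each service. The cluster name, service names, and region are hypothetical placeholders, not the instance's real values.

```python
"""Sketch: CloudWatch dashboard body for the web, Sidekiq, and streaming ECS services.

The cluster name, service names, and region are hypothetical placeholders.
"""
import json

CLUSTER = "mastodon"                        # hypothetical cluster name
SERVICES = ["web", "sidekiq", "streaming"]  # hypothetical ECS service names
REGION = "us-west-2"                        # hypothetical region


def metric_widget(metric_name: str, title: str, y: int) -> dict:
    """A full-width time-series widget plotting one metric's Maximum per service."""
    return {
        "type": "metric",
        "x": 0,
        "y": y,
        "width": 24,
        "height": 6,
        "properties": {
            "metrics": [
                ["AWS/ECS", metric_name, "ClusterName", CLUSTER, "ServiceName", svc]
                for svc in SERVICES
            ],
            "stat": "Maximum",  # peaks, not averages
            "period": 300,      # 5-minute resolution
            "region": REGION,
            "view": "timeSeries",
            "title": title,
        },
    }


def dashboard_body() -> str:
    """JSON string for the DashboardBody parameter of CloudWatch PutDashboard."""
    widgets = [
        metric_widget("CPUUtilization", "CPU utilization (max %)", 0),
        metric_widget("MemoryUtilization", "Memory utilization (max %)", 6),
    ]
    return json.dumps({"widgets": widgets})


if __name__ == "__main__":
    print(dashboard_body())
```

The resulting string could be passed as `DashboardBody` to PutDashboard, for example `boto3.client("cloudwatch").put_dashboard(DashboardName="mastodon-ecs", DashboardBody=dashboard_body())`, where the dashboard name is again hypothetical. Plotting `Maximum` rather than `Average` is deliberate: averages can hide the short peaks that matter most for a small container.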

Opportunities

We should report the incident to the widdix/mastodon-on-aws project to share what we learned with others running the same configuration on AWS.

Resulting Actions

- Set up a CloudWatch dashboard that shows CPU and memory usage across all three services (web, Sidekiq, and streaming). [DONE]

- Set up CloudWatch alarms for high CPU and memory usage on all three services. [TODO]
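
The pending alarm work could start from something like the following sketch. Each dict holds keyword arguments for boto3's CloudWatch `put_metric_alarm`; the cluster and service names, the 90%/85% thresholds, and the 15-minute evaluation window are hypothetical starting points, not values tuned for this instance.

```python
"""Sketch: high CPU/memory alarm parameters for each ECS service.

Each dict matches keyword arguments of boto3's CloudWatch put_metric_alarm.
Names and thresholds are hypothetical starting points.
"""

CLUSTER = "mastodon"                        # hypothetical cluster name
SERVICES = ["web", "sidekiq", "streaming"]  # hypothetical ECS service names
# Percent thresholds per metric (hypothetical, untuned)
THRESHOLDS = {"CPUUtilization": 90.0, "MemoryUtilization": 85.0}


def alarm_params(service: str, metric: str, threshold: float) -> dict:
    """Parameters for one alarm on an ECS service-level metric."""
    return {
        "AlarmName": f"{CLUSTER}-{service}-{metric}-high",
        "Namespace": "AWS/ECS",
        "MetricName": metric,
        "Dimensions": [
            {"Name": "ClusterName", "Value": CLUSTER},
            {"Name": "ServiceName", "Value": service},
        ],
        "Statistic": "Maximum",
        "Period": 300,           # 5-minute periods
        "EvaluationPeriods": 3,  # 3 breaching periods in a row = 15 minutes
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
    }


def all_alarms() -> list[dict]:
    """One CPU alarm and one memory alarm per service."""
    return [
        alarm_params(service, metric, threshold)
        for service in SERVICES
        for metric, threshold in THRESHOLDS.items()
    ]


if __name__ == "__main__":
    for params in all_alarms():
        print(params["AlarmName"], params["Threshold"])
```

Creating the alarms would then be a loop such as `for p in all_alarms(): cloudwatch.put_metric_alarm(**p)`. On its own an alarm only changes state; adding `AlarmActions` with an SNS topic ARN is what would actually notify an admin.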